Enterprise AI Platform

AI Adoption Challenges

Enterprise AI Adoption Challenges

- High Deployment Barriers & Complexity

Deploying a variety of models is complicated, and the lack of a unified platform leads to resource silos and performance inefficiencies.

- Unpredictable Costs & High Maintenance

Traditional infrastructure struggles to scale up or release resources flexibly, causing cost fluctuations. AI models demand substantial compute and storage, making total cost of ownership (TCO) hard to control.

- Security & Data Privacy Risks

Training and inference require access to internal data. Without strict access control and isolation, data leaks or contamination can occur. AI supply chains and endpoints need protection to ensure secure and compliant deployment.

- Developer & Data Scientist Barriers

Most developers lack LLMOps expertise, making model deployment and service integration inefficient. The lack of visual management tools and API interfaces slows implementation and limits the flexibility to innovate.

Nutanix Enterprise AI Advantages

Nutanix Enterprise AI Drives Enterprise AI Adoption

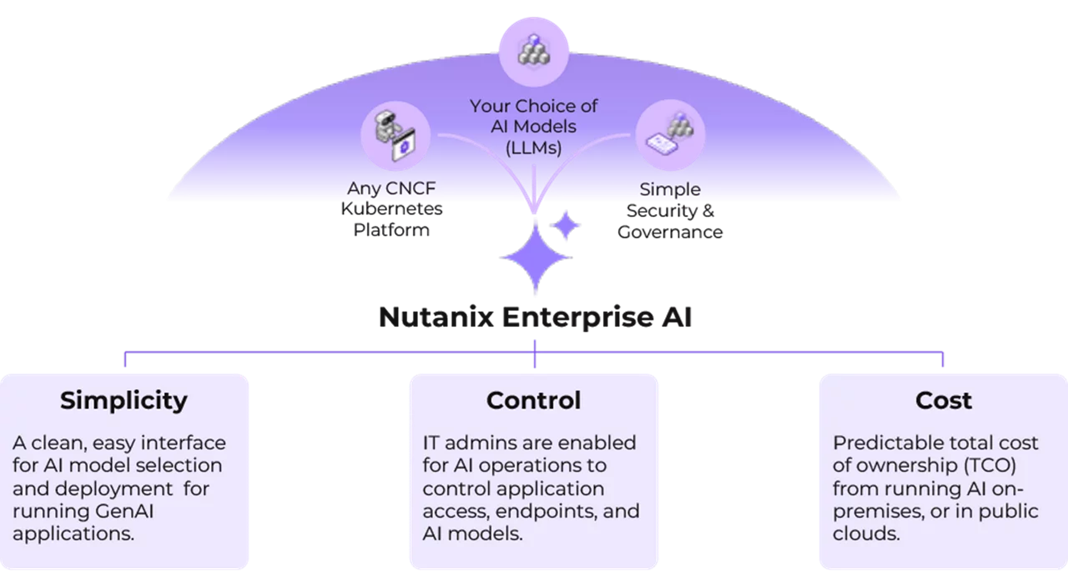

Nutanix Enterprise AI provides a flexible, integrated platform that lets enterprises freely select and deploy the AI models (LLMs) they need in any CNCF-certified Kubernetes environment, with simple security and governance mechanisms. It offers a streamlined interface that lets users easily select and run generative AI applications; gives IT administrators full control over AI operations, including application access, endpoints, and model management; and keeps AI costs manageable through a predictable total cost of ownership (TCO). As a result, enterprises can implement AI safely, controllably, and cost-effectively.

Advantages of Adoption

Easy Management

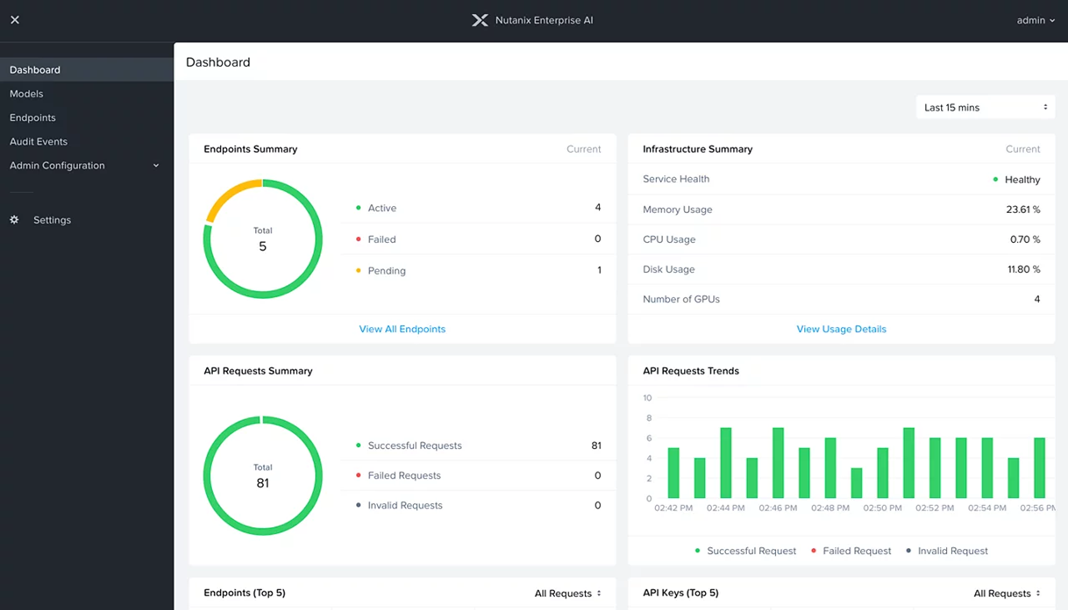

Streamlines workflows for easier monitoring and management of AI endpoints.

Rapid Deployment

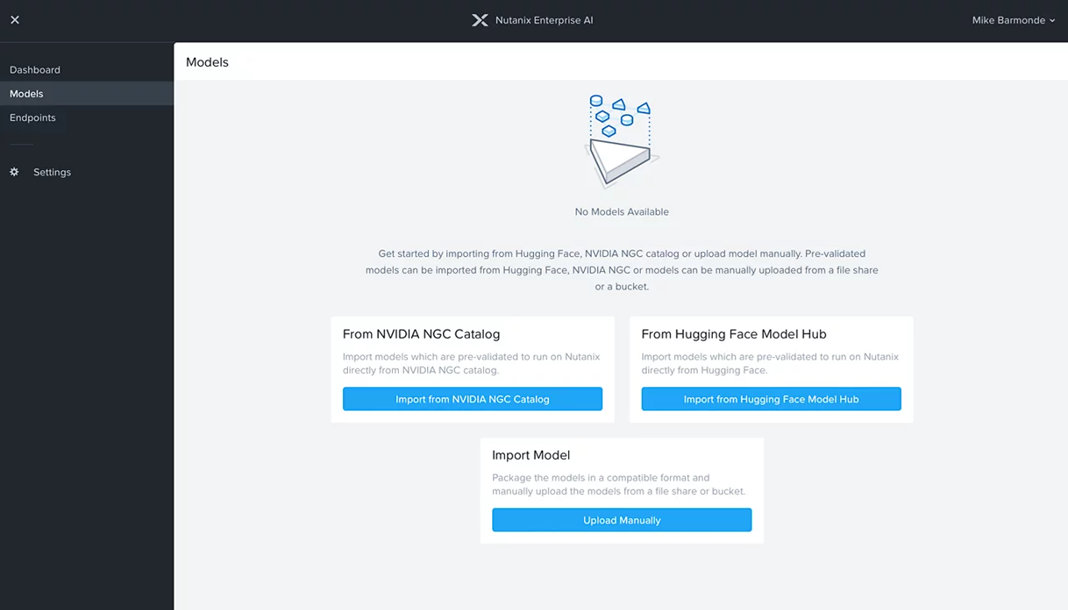

Easily deploy AI models and secure APIs via a click-based interface, with options for Hugging Face, NVIDIA NIM, or your own private models.

Reliable Environment

Run enterprise AI securely in any CNCF-certified Kubernetes environment while fully leveraging existing AI tools.

One-Stop Auditing, Monitoring, and Testing

Audit Management

- Track key events such as logins, API activities, and LLM requests.

- Centrally monitor Kubernetes, GPUs, LLMs, and APIs.

- Visualize metrics like API request volume and Kubernetes infrastructure health via an intuitive dashboard.

AI Model Testing

- Quickly test or validate deployed AI models (LLMs) using pre-designed prompts or queries.

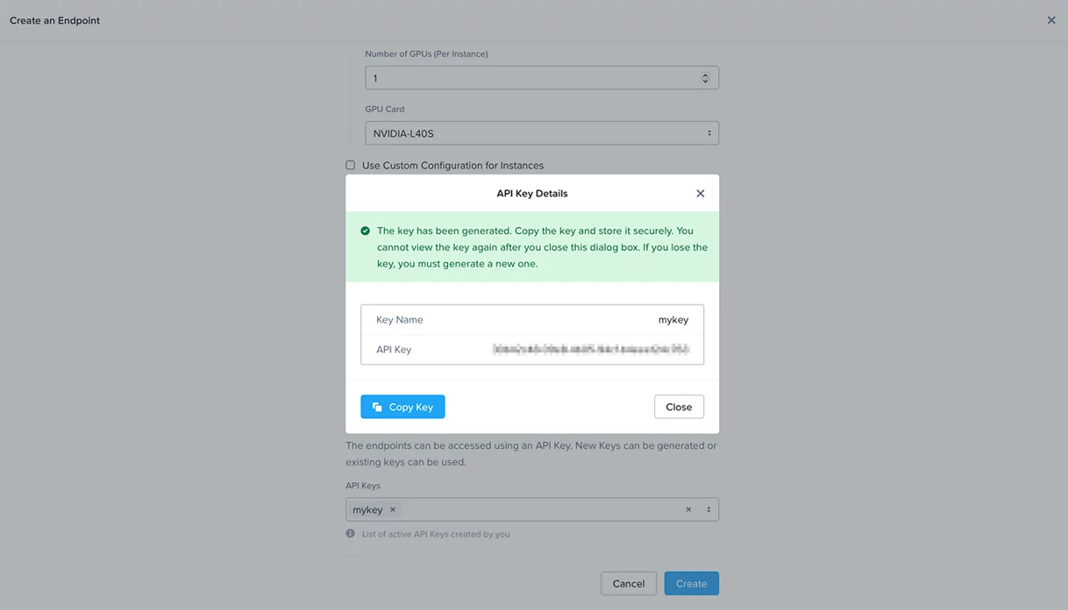
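
A scripted version of this kind of check might look like the following minimal Python sketch. It runs a list of predefined prompts through any callable that returns model text and records whether each reply is non-empty. The function names, the stand-in model, and the pass criterion are all hypothetical and are not part of Nutanix Enterprise AI.

```python
from typing import Callable, Dict, List


def run_prompt_checks(generate: Callable[[str], str], prompts: List[str]) -> Dict[str, bool]:
    """Send each predefined prompt to the model and record whether the reply is non-empty."""
    results: Dict[str, bool] = {}
    for prompt in prompts:
        try:
            reply = generate(prompt)
            results[prompt] = bool(reply and reply.strip())
        except Exception:
            # Any failure to get a reply counts as a failed check.
            results[prompt] = False
    return results


def fake_model(prompt: str) -> str:
    # Hypothetical stand-in for a deployed endpoint; replace with a real client call.
    return "" if "empty" in prompt else f"echo: {prompt}"


checks = run_prompt_checks(fake_model, ["Say hello.", "Return empty output."])
print(checks)
```

In practice `generate` would wrap a call to the deployed endpoint, and the pass criterion would likely be stricter than "non-empty".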

API Token Creation & Management

- Role-Based Access Control: Easily grant or revoke LLM access for developers and generative AI application owners.

- Manage Hugging Face & NVIDIA NIM Tokens: Update and store API tokens for external hubs and registries.

- API Code Examples from Endpoints: Generate URL-formatted JSON sample code for an endpoint with one click, ready for API testing.
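
To give a rough idea of what a token-authenticated test request could look like, the Python sketch below builds such a request without sending it. The `/chat/completions` path, the bearer-token header, the payload fields, and every URL, token, and model name are assumptions for illustration only; the sample code generated for your own endpoint is the authoritative reference.

```python
import json
import urllib.request


def build_chat_request(base_url: str, api_token: str, model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) a chat request for a deployed endpoint.

    Assumes an OpenAI-style /chat/completions route and bearer-token auth;
    both are illustrative assumptions, not confirmed product details.
    """
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": 128,
    }
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


request = build_chat_request(
    base_url="https://ai.example.internal/api/v1",  # hypothetical endpoint URL
    api_token="YOUR_API_TOKEN",                      # placeholder, not a real token
    model="example-model",                           # hypothetical model name
    prompt="Summarize our onboarding policy in two sentences.",
)
print(request.full_url)
print(request.get_method())
```

Passing the request to `urllib.request.urlopen` would send it. Keeping the token in an environment variable or secret store rather than in source code makes it easier to revoke or rotate access later.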

Freedom to Choose LLMs

- Hugging Face Model Hub: Select verified, ready-to-use LLMs from Hugging Face, including Google Gemma, Meta LLaMA, and Mistral.

- NVIDIA NIM: Deploy models like Meta LLaMA via NVIDIA NGC catalog using NVIDIA NIM.

- Custom Models: Upload your own LLMs when the model you need, such as a proprietary one, is not listed.